The Uncanny Valley

Does Restaurant Tech Bridge the Gap?

Welcome to Words with Wynn! If this is your first time perusing my content and you’d like more of my weekly musings, consider subscribing.

This week I’ve been thinking about the Uncanny Valley. For the uninformed, the Uncanny Valley is a psychological concept describing the point at which human-like robots start giving us the ‘heebie-jeebies’, and why.

Shown graphically (less interesting):

Moving farther along the spectrum of human likeness generally garners increased affinity, up to a point. We’re indifferent to the industrial robot. It gets kinda cute when we slap googly eyes on it, becomes massively off-putting once it’s a humanoid Frankenstein foreman, and then we love it as a drinking buddy when it’s indiscernible from the other guys on the line. The cause of the dip is debated, but generally the valley sits in the range between “somewhat human” and “fully human”, with affinity rapidly recovering as the robot approaches the point at which it would be indistinguishable from a human (a la passing the Turing Test).

More tangibly (more interesting):

Humanoid:

Apptronik’s bot is humanoid in structure, but obviously robotic.

Our affinity increases with likeness (and supporting the University of Texas), and the cute little robot face elicits no feelings of uneasiness.

The tool can accomplish human feats and support its masters, but doesn’t unsettle us thanks to its distinct differentiation from us.

The Valley:

Not great, but not obviously perturbing; humanoid enough yet not despicably so.

Ex Machina (the latter photo) actively plays with the Uncanny Valley throughout the film, and it can be exemplified in the scene above as the robot examines the masks of previous models.

But the affinity continues to dissipate as near-human characteristics increase…

Deeply unsettling. In the ballpark of the human spark, yet somethin’ ain’t right.

Just peruse a poster of classic horror movie villains, and you’ll find that many of the most iconic are the most humanly grotesque…

The Return:

This idea is fundamental to the Blade Runner plots, wherein the androids are indiscernible from their human counterparts.

The replicants have crossed the uncanny valley as perfect facsimiles, and are therefore no longer unsettling and are even empathy-inducing…

Except when their behavior extends beyond human norms (lacking empathy, as with the earlier replicant models, or things like 360-degree head turning in horror films).

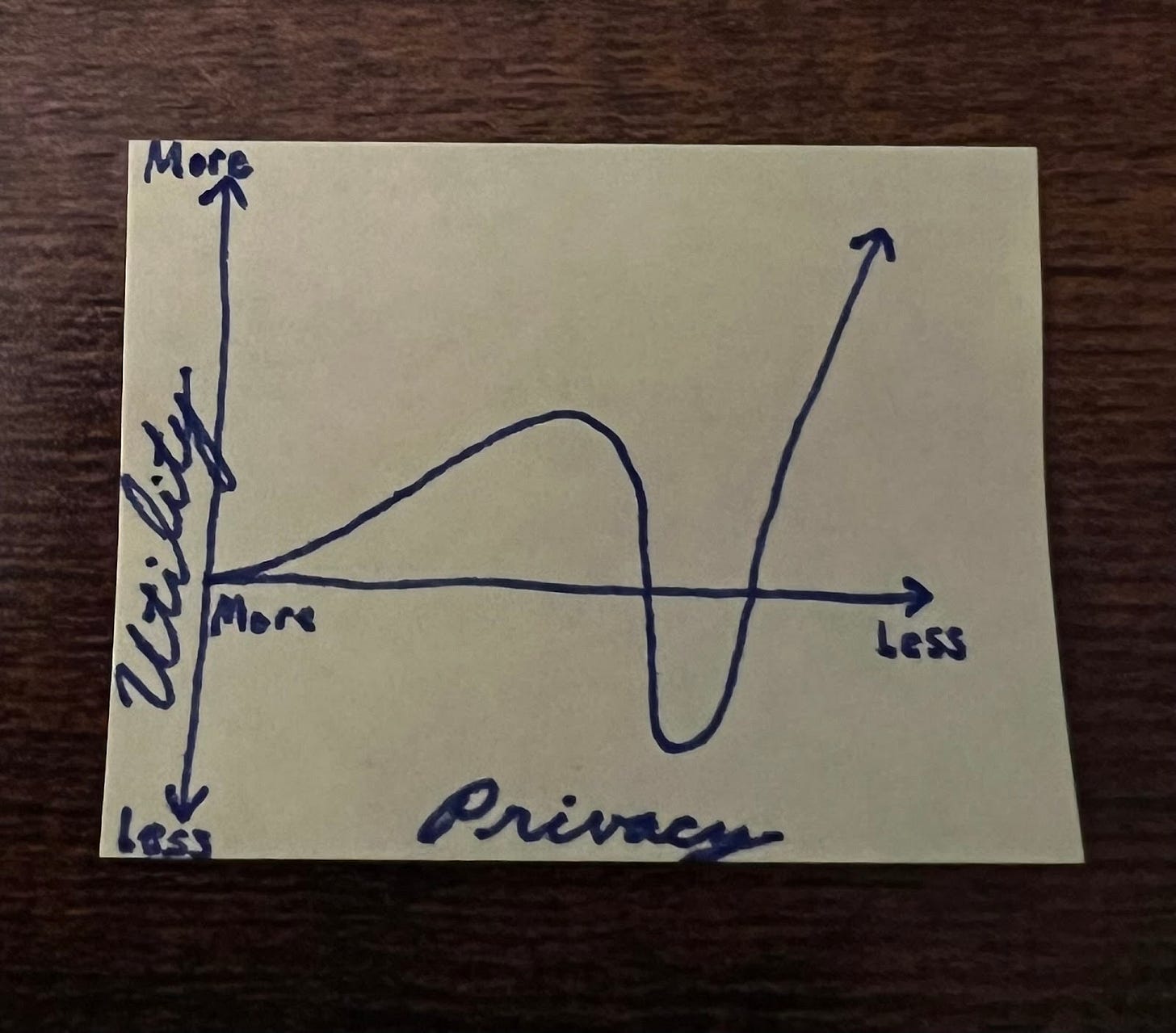

What got me mulling over the Uncanny Valley though was a recent RestaurantTech startup I’ve been helping (more on this later). Its technology and vision reminded me of a parallel reframing of the Uncanny Valley as it applies to technology and privacy. This idea is not original, but here’s the curve roughly as I see it:

The X Axis inversely represents Privacy, moving from more (left) to less (right)

The Y Axis represents a technology’s Utility to the consumer from less (down) to more (up)

The line itself tracks our willingness to adopt a technology that compromises our sense of privacy

Simply, we will eagerly compromise small amounts of privacy for convenience/utility up to a point, after which our sentiment implodes as the technology feels “surveillance state”-esque and the utility feels like it shifts disproportionately from the consumer to the corporation/state/Zuckerberg. But this user sentiment eventually faces a reversion and exponential adoption once it crosses the valley and its utility vastly outweighs its costs in privacy. I think this idea can be interwoven with changes in consumer taste as well as broader technological adoption, but this simplified framework is informative.

Examples of this include:

Alexa, Google Home, and Other Audio-enabled Smart Home Features

To a man or woman out of the 1950s, a globally connected, 24/7 listening device planted directly in their most sacred and personal spaces would be an unthinkable invasion of privacy, and it would be even more incomprehensible that anyone would adopt it voluntarily… That is, until it became useful enough to answer all their questions on a whim, operate the lights to set a mood, change the temperature to increase comfort, and so much more. We haven’t seen ubiquitous adoption among tech-challenged Baby Boomers quite yet, but the promise of an audio assistant that seamlessly empowers an aging parent to reach their grandchild or call for help after a fall is a ‘no-brainer’ trade for many.

Smart Phone GPS Technology

A similarly jarring idea for the conspiratorially-minded: the government, Tim Cook, or any of a slew of app developers will have easy, open, and constant access to your precise whereabouts within a city block or so at virtually all times. Shocking. Yet in 2023, you’d be hard-pressed to find a smartphone without these capabilities (in middle-class America, at least).

Why? Well, the same idea prevailed. The convenience (utility) of everything that’s been developed leveraging this technology has reached a point where it vastly outweighs the obvious privacy concerns. Uber, Uber Eats, Google Maps, dating apps, geographically specific micro-recommendations, etc. have radically improved the quality of life for massive numbers of people, all at the cost of just a slightly massive amount of offline anonymity.

Aside: I understand that Apple has made moves to protect users’ privacy, but you get what I mean.

Personalized Health Data & ‘Quantified Self’ Tools

We’ve come to realize as a society, especially millennials, how powerful longitudinal health data can be and how utterly rubbish our current paradigm is. It’s challenging for a doctor to provide care on the basis of data points gathered periodically and irregularly (bloodwork once every 3 years?). By extension the Quantified Self movement has taken self-optimization to its logical end, leveraging emerging technologies to track and improve every aspect of our life that can be hooked to a wearable.

Collectively, a significant portion of the population has willingly, even enthusiastically, elected to give away its most intimate data across multiple facets of life. Across Eight Sleep, Strava, Oura Rings, Whoop, and Apple Watches, every tech bro and his dog gladly provides the patterns of his life to third-party algorithms to hyper-examine and marginally optimize his existence.

These wearables are a prime example because their utility is so massive. A better night’s sleep each night for a year is a massive upgrade to one’s quality of life. The accountability of a running buddy in Strava can be life-changing for one’s fitness. Regular health and vitals data could chart the course for improved standards of care delivered in more cost-effective ways.

But these wonders aren’t without compromise. Your tinfoil-hat-wearing uncle might just be onto something when we consider the Ukrainian conflict and a Russian sub commander getting picked off, reportedly thanks to the Strava routes he shared.

Which all leads me back to the restaurant tech application that I’ve been considering:

Smart Restaurants of the Future

The team I’ve been supporting has built out an Internet of Things based offering that leverages a few small Bluetooth-enabled devices to provide restaurants with higher fidelity data on their customers, tracking foot traffic, drive-through velocity, customer regularity, and other insightful tidbits. As one imagines the user experience at a McDonald’s of the future, that could include pulling into a drive-through lane and up to a smart menu that automatically adjusts to highlight a customer’s ‘regular’ orders and perhaps even delights them with a surprise (“Hey Wynn! Thanks for being a loyal customer! Here’s a frosty!”). Intuitively, it’s easy to foresee why these kinds of small delights could be a major boon in driving loyalty and foot traffic in the hyper-competitive fast-casual dining space.

But providing a superior dining experience, aside from the quality of the underlying food itself, requires a deeper, clearer understanding of one’s customers, their preferences, and their behaviors than the competition has. This has been the fundamental incentive for every brand to launch a loyalty program since the dawn of time, a trend which was only accelerated by the ubiquity of the internet and the ability to more closely link consumers with their actions. And this information can be leveraged both ways: not only in delighting one’s acquired loyalty members, but also in driving newer customers into the funnel for loyalty programs and return visits. A virtuous cycle.

Of course, data that rich is, by definition, highly revealing of your customer’s identity and behavior, and its utilization can travel across the spectrum of the Uncanny Valley parallel I drew above. From an interpersonal perspective, we could imagine restaurants where the staff is able to leverage this connectivity to build more intimate relationships with their customers. As you visit your local Wendy’s, the cashier gets a ping on their iPad that it’s your fourth visit this quarter and can greet you by name, offering you a little discount at checkout. But then there are other timelines where I cross the geofence of the Wendy’s premises, the facility senses my phone’s Bluetooth, and it drives an unsolicited push notification greeting Wynn Lemmons by name and asking why I haven’t been ordering more salads (which it suggested after detecting a health monitoring wearable on my wrist and hacking the blood pressure data it relayed). Similar uses, very different vibes.

I suppose this goes back to the ways in which the companies actually leverage the data, and whether it feels like a friendly Apptronik bot or a creepy I, Robot humanoid. The crux of my contemplation is whether the average American consumer today will view these innovations as the former or the latter. I tend to think that the marginal utility will outweigh any misgivings from the average Joe, but I’m sure there will be armies of lobbyists employed to shape the relevant consumer protections. Yet, underlying that use case dichotomy is the question of precisely how much of our lives we willingly disclose to our technological appendages in the first place.

For example, another startup that I recently encountered actually builds boxes for tracking foot traffic via Bluetooth signals from wireless devices. This kind of device could be hugely valuable for security at festivals, optimizing urban planning based on heat-mapped foot traffic, understanding customer engagement at retail activations, etc. But again this circles back to the Uncanny Valley of tech privacy. Are these compromises in privacy offset by the marginal societal or service gains extracted?

This has direct applications to some of our most pressing national questions as well. If hyper surveillance could massively reduce gun violence, is that an acceptable exploitation of our privacy? Societally, Americans have clearly voted no thus far, as we view systems like social credit in places like China as flagrantly unacceptable abuses of technology and power. Disregarding other aspects of the Gordian knot that is the gun debate, this question of privacy will only continue to gain momentum as a wave of gun safety startups comes to market with privacy-invasive solutions ranging from smart guns that track biometric data to AI that parses live video streams for faster active shooter alerts (a more palatable flavor of strict citizen surveillance, depending on who you ask).

The original application of the Uncanny Valley is to AI and humanoid robots, but the evolution of LLMs like ChatGPT is rapidly bringing us to a point in history that explores its original premise and my bastardized interpretation simultaneously. How will I feel when a chatbot responds to me with the same sass, diction, and inflections as I dish out to my friends? Once the entirety of my data, from my first text messages to long lost YouTube videos, has been synthesized, will it bother me to converse with a rapidly-advancing facsimile of myself? And at what point will the utility outweigh the invasion of my privacy?

These are questions we’ll soon be contemplating. But for now I’m left to wonder, is it worth a free milkshake?

- 🍋