AI's GUI Moment

Software's Becoming Fluid

Welcome to Words with Wynn! If this is your first time perusing my content and you’d like more of my weekly musings, subscribe below:

Thinking about AI, two quotes keep swirling around my mind:

“Our job is not to see the future, it’s to see the present more clearly.” - Matt Cohler, Benchmark

“The future is already here, it’s just not evenly distributed.” - William Gibson

It’s apparent to anyone playing with these tools that AI is going to change the future. For most of us outside of the research sphere, this idea became obvious with the launch of ChatGPT a few years ago. The models are improving rapidly, the talent wars are raging, and the launch videos are hyping, yet for all the money poured down the AI funnel, it feels like agentic software is still in its Clippy era.

For the average normie, AI’s effects have largely been felt through:

- Marginally improved search functionality: like Google with slightly better results
- Better chatbots on corporate websites: many of which still provide nonanswers
- AI slop content: that’s already brainwashing the most susceptible boomers

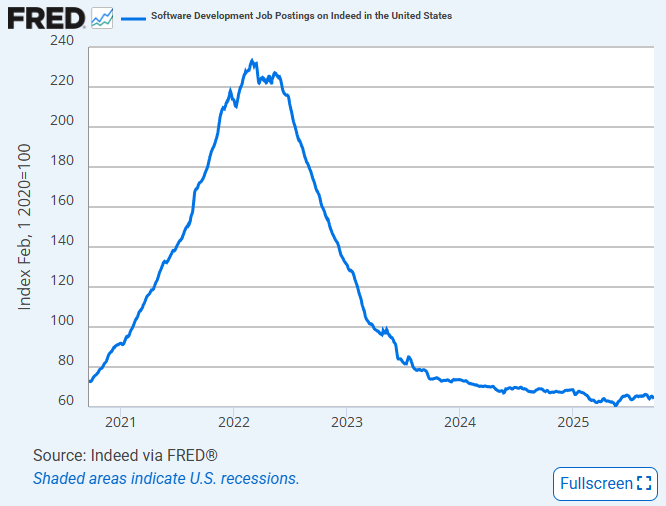

Those of us a bit deeper in the sauce are starting to see its effects more clearly. Just ask anyone you know who develops software.

Playing with some of the more agentic tools, I feel like I’m getting a very clear glimpse at the unfolding present. I certainly can’t see the future, but a couple of avenues of the timeline that interest me are AI’s GUI moment and its first real hardware hit.

I was one of the judges for Jason Calacanis’s LAUNCH demo day a couple weeks ago, and I caught the tail end of a talk given by Superhuman cofounder Vivek Sodera. He was outlining a broad thesis that the future of software is going to be far more fluid. The idea is that our interactions with computers are about to change radically through a more amorphous kind of ‘just in time’ software experience. LLMs will produce bespoke or tailored software solutions as the need arises, then compartmentalize the programs in a way that’s very different from our laborious download+click+search+type+etc. workflow of today. I’d had inklings of this idea percolating, but his talk helped to firm up some of my thoughts. Bear with me though, as they’re still a bit gelatinous.

It made me think of computing’s GUI moment.

In the nascent days of software, computers were accessed through a combination of command prompts and coding, where the user was essentially typing very specific functions into a computer to output the desired actions.

This process was extremely high friction. It required specialized knowledge to invoke even basic actions from a computer, which was unintuitive and cumbersome. Using a computer in the pre-GUI era was essentially coding in its rawest form: inputting, step by step, the specific commands that we now wrap into even the most basic software. The slightest syntax error could torpedo your entire workflow. It was a clear problem that early technologists set out to fix.

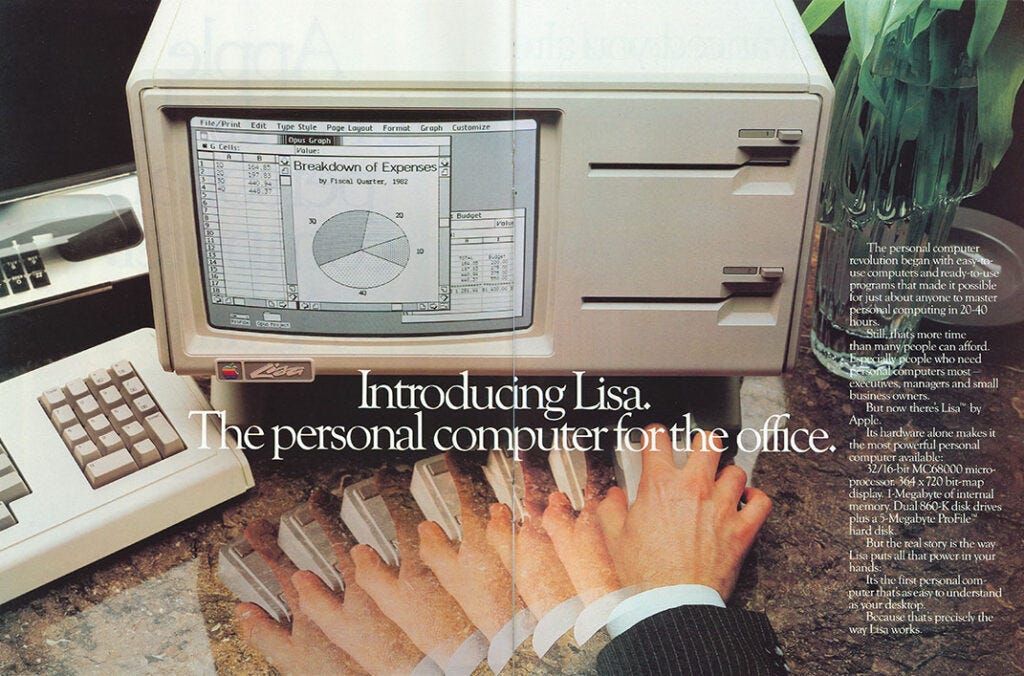

Famously, Xerox PARC, the R&D hub of the early computing pioneer, invented a version of the graphical user interface. It paired a mouse with point-and-click functionality and visual representations of files, functions, and programs, allowing users to command their computer in a more intuitive way. This was a breakthrough in user experience, but the stodgy tech titan lacked the vision to really commercialize it.

In one of computing’s most fateful encounters, Steve Jobs was shown a demo of the interface while visiting Xerox PARC and immediately grasped the magnitude of what they had invented. He would later say that it was like a veil had been lifted for him, and that Xerox could have owned the entire computer industry had they realized their brilliance. Apple rolled this functionality into the Lisa and then the Macintosh, helping to birth the user interface that defines computing today.

The Graphical User Interface made interacting with technology so simple your grandma could do it. It was more intuitive, more natural, and more accessible in a way that broadened the addressable audience for computers by an order of magnitude. In conjunction with more powerful chips and a burgeoning software development industry, the GUI helped to power the computer’s broader adoption as a business tool in corporate America. The revolution had begun.

I think that artificial intelligence may be the next turning of this screw. There’s a direct impact on software itself, then there’s the broader redefining of computer interfacing as a whole.

For the former, I feel like vibe coding has given me a taste of this narrowly distributed future. These tools are accessible, but not widely known, and limited by the distribution of the startups themselves (though this is growing exponentially). Just as basic interactions with the computers of the ’70s required a nuanced understanding of command line prompting, software development has long been a gatekept profession. In order to speak computer in 2010, you still needed to know a coding language of some sort (C++, Java, etc.). The friction of learning this skillset helped to create a hacker class that was extremely well compensated for its expertise. Computers and the internet created nearly infinite leverage, but only a select few within the broader population could really run with this force multiplier. Then came no-code tools as an attempt to close that gap: a generation of simplified software that could empower the normies. This went a long way, but didn’t quite blow off the doors the way agentic coding has…

Now your grandma can create an alarm clock app with natural language. No coding, no hosting, no complex back end. Replit’s got her covered. Software will become content (a drum I keep beating), and the accompanying economics will rapidly degrade.

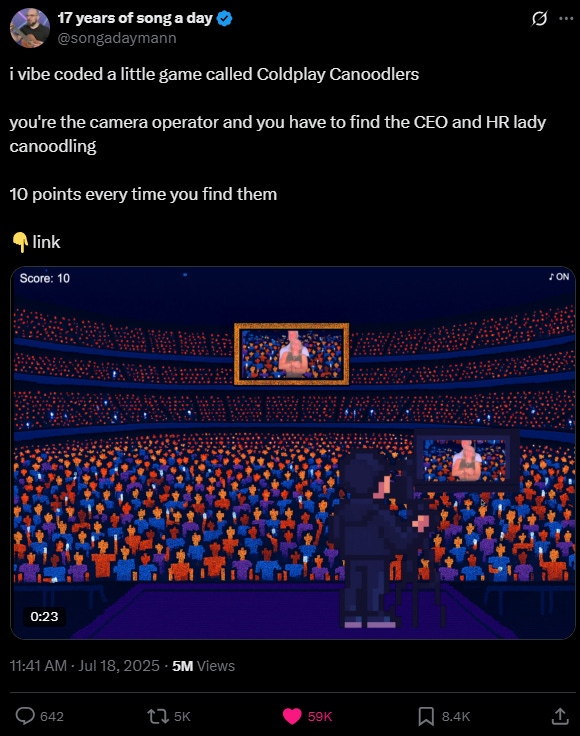

Remember the Coldplay Kiss Cam scandal? A guy vibe coded that into a game within a day:

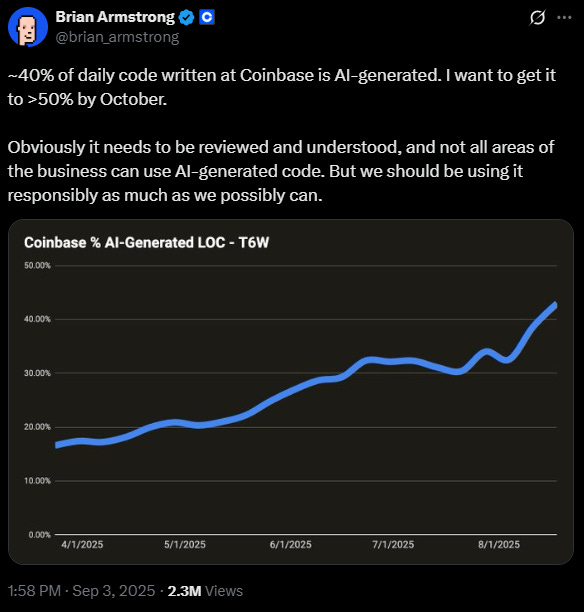

And this extends beyond memetic games. The nature of industrial-grade software development is transforming as well:

One exceptional software architect with an army of agents can ship with the velocity of a dozen Comp. Sci. interns. Software development isn’t immune to power laws.

This upheaval also interplays with the next generation of AI-native programs and the operating systems that will access them.

Currently, most of our AI-based interactions happen via an intermediary native to the program or through a third party app itself. When I use AI, I’m going to the actual ChatGPT site or I’m interacting with the Help Bot on the Chase page. These are interesting and have their clear use cases, but I think the next leg up could be a far more dramatic reimagining of software.

A stupid example:

I have a long-standing beef with the fact that neither Google Docs nor Google Sheets has a native Dark Mode. It’s 2025, we’re inventing god intelligences to annihilate humanity in the next few years, and Google still hasn’t added these basic features. Wild. This is an eminently solvable problem. Yes, I know Chrome extensions like Dark Reader exist, but in our imagined future this fix would be merely a prompt away.

Where that sits in the stack, I’m still unsure, but there are a few ways it could play:

App level: “Hey Gemini? You’re scorching my corneas. Please implement dark mode into this web page for me and add a button at the end of the toolbar to toggle this feature.”

YC has famously said “Make something people want.” What if they could make it themselves within your software? No more help tickets and feature requests… Software could be built to give your users the reins, empowering decentralized product development while also driving tighter feedback cycles, enabling builders to push some amount of their roadmap ideation directly into the hands of users. Or perhaps it’s a world where the software that each of us uses is as customized as the highly personalized feeds that dominate our social media platforms.

Browser level: “Hey Chrome, as I surf the web 🏄♀️, please keep my precious peepers from getting sauteed. Any web page that has primarily white background features or light/bright colors dominating the field of view, please flip to be inverted (dark mode). Do not perform this color inversion on images, icons, or logos on the pages themselves.”

Of course this is far out today (and prohibitively expensive in terms of compute, I’m sure), but it might not be an unreasonable future. Though the two examples above still feel a bit skeuomorphic. They’re merely overlaying kinda new ideas on top of legacy tech.

More radical reimaginings could manifest at the operating system or hardware levels.

Back to the GUI idea: the entire interface with our laptop should be able to evolve. Operating systems will soon host native agents (think Microsoft’s Clippy as your laptop copilot) as well as advanced voice processing software. One of the breakthroughs with LLMs is natural language processing that could soon allow us to navigate our computers at the speed of sound, dictating commands as they come to mind. You could even bolster its effectiveness by leveraging something like the cutting-edge eye-tracking capabilities that Apple honed while developing the Vision Pro. A more capable computer could be reading your eye movements, tracking your voice, and whizzing away through emails like never before (the most depressing use case possible).

Think about it: why the hell am I clicking into Twitter, clicking Tweet, typing my thought, then clicking the upload button, navigating 10 folders, finding the meme I’d like to add, then uploading it, then clicking send?

Typing the eight steps it took me to post that shitty meme above already makes it feel like an incredibly archaic workflow. The way VCs dictate ideas to their ghostwriters, I ought to be able to have my Clippy whip it up in a fraction of the time.

Then I take this to its logical conclusion, which is a reinvention of the hardware itself. Models today are massive, so a lot of the AI-native hardware experiments like Friend or Limitless are really more like edge nodes, with the heavy compute being performed elsewhere. This may not always be the case. Models will continue to get better, and eventually they will get smaller and more efficient as architecture gets optimized and additional breakthroughs occur. Additionally, if Moore’s law can continue its steady march forward, these forces will converge to produce incredibly powerful agents in your pocket.

We’re already seeing tech giants like Meta going all in on their bet that the smartphone will eventually be supplanted by a more intuitive form factor.

Mark Zuckerberg certainly seems to believe that it’s some kind of headwear. An agent in your pocket will lack the kind of rich context that a camera at eye level will be able to ingest. Plus, a heads-up display in your field of view may prove to have more utility than pulling out your phone every thirty seconds. Other tech players share this thinking, with companies like Anduril going deep on AR/XR technology for the warfighter with their Eagle Eye augmentation platform. Palmer Luckey’s looking to create American Technomancers and envisions a future where “…you’re going to see a head-mounted display of some kind on basically every single head in the DoD.” (Core Memory). If the hardware can get small, light, and powerful enough, the aperture for how we infuse our life with technology will dramatically change.

Of course, this phase shift won’t be all or nothing, and no change happens overnight. For all my enthusiasm, I’ve dramatically oversimplified quite a bit of the messy middle ground between an idealized future and chatbots spewing corporate slop today. Even the most tech forward companies are seeing their AI implementations underwhelm.

That said, these evolutions feel inevitable. The GUI was a step function change for our relationship with software. Its effect was profound because it abstracted away the dull and dirty parts of navigating technology. Commoditized intelligence has the opportunity to abstract away so much more in ways that we’re only beginning to glimpse today. The Clippys of the past were a meme for their friction, but the Cortanas of the future might just be the pilots we need.

- 🍋